How does artificial intelligence affect the environment?

Artificial intelligence has become deeply woven into the fabric of modern life, powering everything from smartphone assistants to complex medical diagnostics. Yet beneath the surface of this technological transformation lies a growing environmental concern that many teams underestimate. AI’s environmental impact extends far beyond the sleek interfaces we interact with daily, reaching into massive data centers that consume significant amounts of energy. As AI energy consumption surges alongside expanding capabilities, understanding this relationship matters for leaders who want innovation without environmental blind spots.

Here is the paradox. These systems promise to optimize processes and solve sustainability challenges, yet their own environmental cost raises serious questions. Training a single large model has been estimated to emit as much carbon as several cars over their lifetimes, while continuous operation of AI infrastructure demands round-the-clock power. The rapid proliferation of use cases means the impact multiplies across sectors. The responsible path forward combines sustainable AI practices with green AI technology to deliver measurable carbon footprint reductions without slowing down execution.

This article examines the multifaceted environmental implications of AI and provides pragmatic strategies to reduce energy, carbon, and resource usage while maintaining delivery speed in enterprise settings.

Want a faster path to sustainable automation? Explore Smart AI solutions that cut energy use and carbon while maintaining delivery speed.

Ready to implement sustainable AI solutions?

Moving from intent to impact starts with a structured operating model. First, define a baseline for energy, water, and carbon tied to specific training and inference workloads. Next, review architecture choices against environmental constraints and business priorities. Then, phase optimizations and governance into your roadmap so improvements persist over time. For example, a mid-market logistics company that rebalanced batch inference to lower-carbon regions, reduced model size by sixty percent, and scheduled compute for renewable peaks cut operational emissions by more than thirty percent in one quarter while improving service levels.

Expert guidance can accelerate this journey by aligning automation opportunities with sustainability goals. That includes identifying high-return-on-investment use cases, right-sizing models, coordinating infrastructure commitments with procurement, and building dashboards that expose real-time cost and carbon signals to decision makers.

The energy footprint of AI systems

AI energy consumption is one of the most pressing issues in modern technology deployment. When researchers train advanced models, computational requirements translate into electricity demands that can rival those of small cities. A single training cycle for state-of-the-art language models can produce carbon emissions comparable to several automobiles operating throughout their lifetimes. This environmental cost goes beyond isolated incidents. Data centers as a whole, including those supporting AI workloads, account for an estimated one to two percent of global electricity consumption, with growth expected as adoption accelerates in enterprise settings.

The computational intensity stems from massive matrix calculations, neural network propagation through deep layers, and repeated model refinement. Enterprise deployments face compounding energy demands across infrastructure dimensions. Server compute draws baseline power for processing, while sophisticated cooling systems require additional electricity to manage the heat generated by densely packed hardware. Network data transfer adds overhead as models access training datasets and serve predictions. Storage systems require persistent power to maintain vast repositories. Backup and redundancy duplicate these requirements to ensure business continuity. Consider a financial services organization with fraud detection models delivered across multiple regions. It must operate around the clock to meet risk and compliance obligations, so every component contributes to the cumulative footprint that responsible leaders must manage through sustainable AI practices.
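One common way to summarize the overhead described above is power usage effectiveness (PUE): total facility energy divided by the energy consumed by IT equipment alone. The sketch below is illustrative only. The function name and every kilowatt-hour figure are assumptions chosen for the example, not measurements from a real facility.

```python
def power_usage_effectiveness(it_kwh: float, overhead_kwh: float) -> float:
    """PUE = total facility energy / IT equipment energy (1.0 would mean zero overhead)."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return (it_kwh + overhead_kwh) / it_kwh


# Hypothetical monthly figures in kWh, for illustration only.
it_energy = 700 + 50 + 250   # servers + network + storage
overhead = 400               # cooling and other facility overhead

print(power_usage_effectiveness(it_energy, overhead))  # 1.4
```

A value of 1.4 would mean that every kilowatt-hour delivered to servers, network, and storage costs another 0.4 kilowatt-hours in cooling and facility overhead.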

Training vs inference energy costs

Training is an intense burst of compute where algorithms process massive datasets repeatedly to learn patterns. This can require days or weeks depending on model complexity. Inference is different. Once deployed, models generate predictions continuously in production, creating ongoing energy consumption. A customer service assistant may require weeks of training but will then serve millions of daily interactions with modest per-query energy usage. The business opportunity lies in optimizing the ratio: right-size training and heavily optimize inference. That balance lowers environmental impact while preserving service quality.
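To see why inference deserves heavy optimization, it helps to estimate how quickly a modest per-query cost adds up to the one-time training cost. The following is a minimal sketch of that arithmetic. The function name and all workload figures are hypothetical and should be replaced with measured values.

```python
def days_until_inference_matches_training(
    training_kwh: float, wh_per_query: float, queries_per_day: float
) -> float:
    """Days of serving after which cumulative inference energy equals training energy."""
    daily_inference_kwh = wh_per_query * queries_per_day / 1000.0
    if daily_inference_kwh <= 0:
        raise ValueError("inference load must be positive")
    return training_kwh / daily_inference_kwh


# Hypothetical workload: 50,000 kWh to train, 0.5 Wh per query, 2 million queries a day.
print(days_until_inference_matches_training(50_000, 0.5, 2_000_000))  # 50.0
```

Under these assumed numbers, serving overtakes training in under two months, which is why per-query savings compound for high-volume services.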

Hardware acceleration impact

Specialized chips such as graphics processing units and tensor processing units deliver dramatic throughput gains yet increase power density inside data centers. These accelerators handle parallel computations more efficiently than general processors, but their concentrated energy consumption introduces thermal challenges and drives overall facility power higher. Selecting efficient hardware architectures is a pivotal decision where green AI technology meets operational cost. Newer chip generations often improve computational performance per watt, but upgrades also generate electronic waste as older equipment is replaced. A responsible plan accounts for both efficiency improvements and equipment lifecycle impacts.

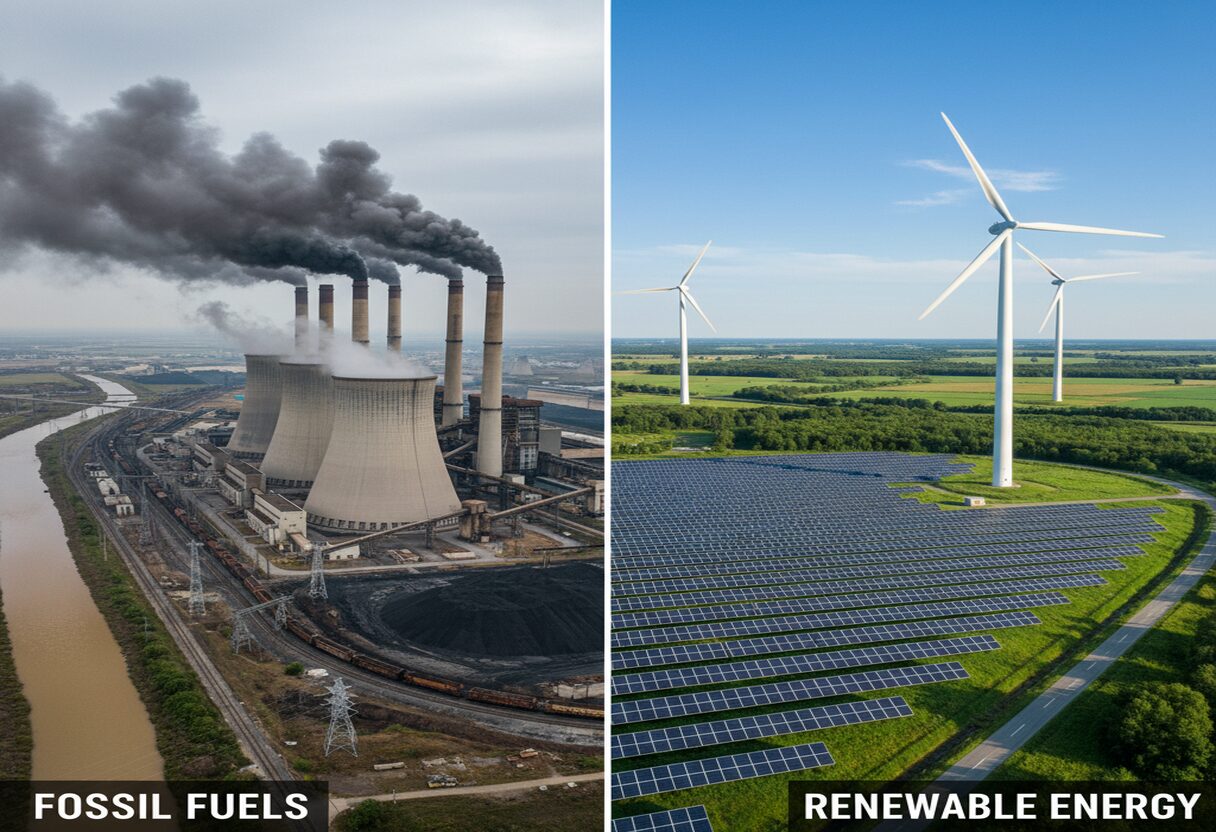

Carbon emissions and climate impact

Reducing AI’s carbon footprint is about more than electricity volume, because carbon intensity varies dramatically by region. Workloads on fossil-heavy grids generate far higher emissions than identical operations powered by renewables. Two identical training runs can have very different climate impacts simply based on location. A single large training cycle in a carbon-intensive region can produce hundreds of thousands of pounds of carbon dioxide. Location matters, and so do time-of-day patterns. Organizations pursuing eco-friendly automation should assess not only the size of workloads but also where and when they run.
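The location effect follows from a simple relationship: operational emissions equal energy used multiplied by the carbon intensity of the grid that supplied it. Here is a small sketch of that calculation. The energy figure and both grid intensities are hypothetical round numbers picked for illustration.

```python
def emissions_kg(energy_kwh: float, grid_intensity_g_per_kwh: float) -> float:
    """Operational CO2 in kilograms: energy (kWh) times grid intensity (g CO2 per kWh)."""
    return energy_kwh * grid_intensity_g_per_kwh / 1000.0


# Hypothetical training run and grid intensities, not real measurements.
TRAINING_ENERGY_KWH = 100_000

print(emissions_kg(TRAINING_ENERGY_KWH, 800))  # fossil-heavy grid: 80000.0 kg
print(emissions_kg(TRAINING_ENERGY_KWH, 30))   # hydro-rich grid:    3000.0 kg
```

The same run differs by more than an order of magnitude in this example, purely because of where it executes.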

There is also a counterweight. The same technology that consumes energy can deliver strong sustainability outcomes when applied wisely. For instance, advanced models support environmental monitoring and climate modeling that strengthen early warning systems, optimize resource use, and inform policy. To make an informed decision, leaders should weigh benefits against costs across the entire lifecycle, not just the training event. Practical evaluation criteria include:

- Regional electricity grid carbon intensity and fossil fuel dependency

- Data center renewable sourcing and verified power purchase agreements

- Cloud provider sustainability commitments and transparent reporting

- Hardware lifecycle emissions from manufacturing through end-of-life

- Cooling infrastructure impacts including water consumption and refrigerants

Regional variations in AI carbon intensity

AI workloads in regions with significant renewable penetration produce dramatically lower emissions than those in fossil-fuel-dependent areas. A training run in a grid dominated by geothermal and hydroelectric sources will generate a fraction of the carbon compared to coal-reliant regions. Strategic geographic distribution of compute is one of the most effective ways to cut emissions without reducing capability. A manufacturing firm can route batch jobs to renewable-rich regions during off-peak hours, combining operational efficiency with environmental stewardship.
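Routing a batch job can be sketched as picking the cleanest region that still satisfies the job’s constraints. The example below assumes a single latency limit as the only constraint. The region names, intensities, and latencies are invented for illustration and do not correspond to any provider’s catalog.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str                    # hypothetical label, not a real cloud region
    intensity_g_per_kwh: float   # average grid carbon intensity
    latency_ms: float            # round-trip latency from the data source


def pick_region(regions, max_latency_ms):
    """Return the lowest-carbon region whose latency is within the limit."""
    eligible = [r for r in regions if r.latency_ms <= max_latency_ms]
    if not eligible:
        raise ValueError("no region satisfies the latency limit")
    return min(eligible, key=lambda r: r.intensity_g_per_kwh)


regions = [
    Region("hydro-north", 30, 140),
    Region("mixed-central", 350, 60),
    Region("coal-east", 700, 20),
]

print(pick_region(regions, max_latency_ms=200).name)  # hydro-north
print(pick_region(regions, max_latency_ms=100).name)  # mixed-central
```

Batch jobs usually tolerate a loose latency limit, which is what lets them move to the cleanest region. Interactive services may have to settle for the best option nearby.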

Offset strategies and carbon neutrality

Mature organizations implement carbon offsets, renewable energy credits, and direct investments to neutralize AI-related emissions while advancing toward net zero commitments. These initiatives may include funding reforestation, supporting community energy projects, or investing in on-site generation that exceeds consumption. More importantly, they pair offsets with reductions from architecture decisions, efficiency improvements, and workload scheduling so that neutrality is not achieved through offsets alone.

Resource consumption and electronic waste

Artificial intelligence affects more than electricity. Data centers rely on substantial water resources for cooling systems, and that can create tension with community needs in water-scarce regions. Semiconductor manufacturing for specialized chips requires ultra-pure water in large quantities and generates hazardous waste that needs careful treatment. Rapid hardware cycles also create growing waste streams as organizations upgrade to sustain competitive performance.

The full lifecycle burden spans manufacturing emissions for components, operational resource use, and complex end-of-life disposal. Circular economy approaches remain underdeveloped for specialized computing assets. For instance, when a cloud provider refreshes its fleet, thousands of servers can be retired at once, overwhelming recycling capacity. Businesses building green procurement strategies for AI hardware should evaluate the full resource footprint at the purchase decision, and pre-plan responsible disposition and refurbishment to capture residual value rather than externalize costs.

Water usage in AI infrastructure

Large facilities use evaporative and liquid cooling. This consumes significant water to maintain optimal chip temperatures. In dry regions, industrial water use for cooling can collide with agricultural and municipal needs. Selecting data center locations and cooling technologies should therefore be an environmental decision as much as a financial one. Heat reuse for district heating and closed-loop liquid cooling can meaningfully reduce impact and create community benefits.

Hardware lifecycle management

Extending hardware life through selective component upgrades and tiered workload assignment reduces environmental impact while preserving performance. For example, assign older servers to lightweight inference at the edge and reserve the newest accelerators for training. Build responsible recycling programs that recover precious metals and rare earth elements from retired equipment. Today, recovery rates remain low due to technical complexity and economics. This is a frontier where procurement strategy and sustainability strategy become the same conversation.

Sustainable AI implementation strategies

There is a clear path to reduce AI environmental impact without losing business momentum. Model optimization can cut compute and memory needs while preserving accuracy. Pruning removes redundant parameters that contribute little to outcomes yet consume energy. Quantization lowers numerical precision, which reduces memory bandwidth and power draw. Knowledge distillation transfers learning from a large teacher model to a smaller student model, enabling lighter deployments that maintain accuracy for the use case. These sustainable AI practices, applied with discipline, make carbon footprint reductions real and repeatable.
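To make two of these techniques concrete, here is a toy sketch of magnitude pruning and symmetric 8-bit quantization on a flat list of weights. It uses plain Python rather than any specific framework, the function names are illustrative, and real deployments would rely on the tooling of their machine learning library and validate accuracy afterward. Knowledge distillation requires a training loop and is not shown.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric linear quantization to integers in [-127, 127]; returns (ints, scale)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Map quantized integers back to approximate floating-point weights."""
    return [q * scale for q in quantized]


# Hypothetical weights for illustration.
weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02]
pruned = prune_by_magnitude(weights, sparsity=0.5)
print(pruned)  # [0.9, 0.0, 0.4, 0.0, -0.7, 0.0]

quantized, scale = quantize_int8(pruned)
print(quantized)  # [127, 0, 56, 0, -99, 0]
```

Each quantized value fits in one byte instead of four, and the zeros left by pruning can be skipped or stored sparsely, which is where the memory and energy savings come from.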

Prioritize measurable levers: right-size models, route workloads to cleaner regions, and optimize inference to reduce energy at scale without sacrificing service levels.

Architecture choices matter too. Edge computing processes data closer to the source, which reduces network energy and latency. A retail chain can run computer vision locally in stores rather than streaming video to the cloud, achieving the same business result with lower bandwidth and energy. If you are evaluating edge rollouts, compare hardware and software stacks and test for energy per inference, not just accuracy. For deeper evaluation of deployment options, a guide to edge platforms for efficient AI inference can help shape a short list.

Selecting cloud regions with demonstrated renewable energy commitments improves the carbon profile of workloads. In addition, aligning compute with renewable generation windows strengthens the effect. Intelligent scheduling frameworks can shift non-urgent batch jobs to coincide with wind or solar peaks. Recent research on energy-aware scheduling shows how to coordinate workload timing with grid conditions. For technical background, see research on scheduling AI workloads around renewable energy availability, which covers approaches that balance energy efficiency and cost.
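The core of carbon-aware scheduling can be sketched as a search for the cleanest contiguous window in a carbon intensity forecast. The example below assumes an hourly forecast is already available as a list of numbers. The values shown are hypothetical, and how the forecast is obtained (for example from a grid operator or a data provider) is left out on purpose.

```python
def best_start_hour(forecast, duration_hours):
    """Return the start index of the contiguous window with the lowest total intensity."""
    if duration_hours <= 0 or duration_hours > len(forecast):
        raise ValueError("duration must fit inside the forecast")
    window = sum(forecast[:duration_hours])
    best_sum, best_start = window, 0
    # Slide the window one hour at a time, adding the new hour and dropping the old one.
    for start in range(1, len(forecast) - duration_hours + 1):
        window += forecast[start + duration_hours - 1] - forecast[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start


# Hypothetical hourly forecast in g CO2 per kWh, starting at hour 0.
forecast = [450, 420, 300, 180, 150, 200, 380, 500]

print(best_start_hour(forecast, duration_hours=3))  # 3
```

Here a three-hour batch job would start at hour 3, when the assumed grid mix is cleanest. A production scheduler would also need to respect deadlines and capacity limits.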

Model efficiency optimization

Modern optimization can reduce model size and computation by up to ninety percent while maintaining accuracy that is sufficient for the business goal. The principle is simple. Build the smallest model that still meets the objective, and prefer reuse of pre-trained assets over training from scratch. By right-sizing models, you reduce both training and inference energy. For teams that must serve millions of inferences per day, this is often the single largest lever for sustainable scale.

Green cloud provider selection

Not all data center regions are equal. Seek providers that disclose renewable energy percentages, carbon intensity by region, and power usage effectiveness. Verify that carbon-neutral or carbon-free regions are not marketing claims but backed by credible contracts and third-party assurance. When procurement criteria elevate sustainability alongside cost and latency, the environmental cost of AI falls without sacrificing performance.

Renewable energy integration

Align batch processing with renewable availability. Schedule long-running training during wind-rich overnight windows or solar peaks in the afternoon. Combine this with autoscaling policies that release idle resources quickly. When engineering and operations teams work from a shared playbook that includes carbon-aware scheduling, green AI technology becomes a daily practice rather than an annual initiative.

Governance, measurement, and reporting

You cannot improve what you do not measure. Build dashboards that surface energy use, carbon intensity, and water signals at the workload level. Expose the metrics to product owners and technology leadership, not just sustainability teams. Useful indicators include energy per training epoch, energy per inference, carbon intensity per region and time window, and water intensity per megawatt hour in the data center. Then set thresholds for new deployments so every new service ships with a sustainability budget as well as a latency and cost budget.
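A sustainability budget can be checked the same way a latency budget is. The sketch below computes energy per inference and flags any budgeted metric that exceeds its threshold. The metric names, thresholds, and measurements are all hypothetical, and treating an unmeasured metric as a violation is a design assumption of this example.

```python
def energy_per_inference_wh(total_kwh: float, inference_count: int) -> float:
    """Average energy per inference in watt-hours."""
    if inference_count <= 0:
        raise ValueError("inference_count must be positive")
    return total_kwh * 1000.0 / inference_count


def budget_violations(metrics: dict, budget: dict) -> list:
    """Names of budgeted metrics that exceed their limit or were never measured."""
    return [
        name for name, limit in budget.items()
        if metrics.get(name, float("inf")) > limit
    ]


# Hypothetical thresholds and measurements for one service.
budget = {"wh_per_inference": 0.5, "g_co2_per_inference": 0.2, "p95_latency_ms": 300}
metrics = {
    "wh_per_inference": energy_per_inference_wh(120, 400_000),  # about 0.3 Wh
    "g_co2_per_inference": 0.25,
    "p95_latency_ms": 210,
}

print(budget_violations(metrics, budget))  # ['g_co2_per_inference']
```

A check like this could run as a release gate so that a service over its carbon budget is treated like one that misses its latency target.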

Practical next steps for technology leaders and operations teams include:

- Establish a baseline for training and inference energy, carbon, and water by workload

- Adopt a policy for model right-sizing and re-use before training from scratch

- Set region selection standards based on renewable percentages and verified reporting

- Introduce carbon-aware scheduling for batch jobs and model retraining

- Plan hardware lifecycle management with refurbishment and certified recycling

AI as an environmental solution

Artificial intelligence can also be a force multiplier for sustainability when applied to high-impact problems. Grid operators use forecasting to predict demand and balance loads across generation sources, cutting waste in transmission and distribution. Renewable energy forecasting improves the integration of intermittent solar and wind. Smart building management reduces the energy used for heating, ventilation, air conditioning, and lighting by double-digit percentages through continuous optimization that responds to occupancy and weather. Supply chain optimization reduces transportation emissions through better routing, consolidation, and demand planning. The net environmental impact of AI depends on focusing deployments where savings significantly exceed the cost of running the models.

Environmental monitoring applications detect deforestation from satellite imagery, predict severe weather by processing large meteorological datasets, and identify pollution sources through sensor networks. In food systems, precision agriculture reduces water usage, pesticide application, and fertilizer waste with model-driven crop monitoring that detects stress, pests, and nutrient deficiencies at plant level. A large-scale farming operation using precision techniques can reduce chemical inputs while maintaining yields. When AI is deployed for genuine operational efficiency, energy reductions often offset the system’s own consumption and create net-positive outcomes. For background on the science of monitoring, see peer-reviewed work on AI-based environmental monitoring and climate modeling, and consider how similar approaches can be adapted to your sector.

Industrial process optimization

Manufacturing, logistics, and utilities hold some of the largest opportunities. Machine learning can uncover subtle patterns in sensor data that reveal equipment drift, energy waste in production sequences, and material inefficiencies. Targeted improvements reduce energy consumption and scrap while increasing throughput. In one common scenario, re-sequencing batch processes to minimize peak energy draw and applying predictive maintenance to high-load equipment yields immediate cost and carbon benefits with little change to operator workflows.

Climate modeling and environmental protection

Advanced models process massive environmental datasets spanning atmospheric conditions, ocean temperatures, ice sheet behavior, and ecosystem indicators to predict climate trends at useful granularity. These insights enable proactive conservation strategies, early warnings for extreme events, and targeted policy interventions. They also support private sector planning, such as climate risk assessments for facilities, supply routes, or critical infrastructure. When organizations align sustainability goals with decision-grade analytics, investment in AI becomes an asset for planetary stewardship.

The relationship between artificial intelligence and environmental sustainability is a genuine paradox that requires strategy, not slogans. AI’s environmental impact includes significant energy consumption, carbon emissions from training and inference, water use for cooling, and electronic waste from fast hardware cycles. At the same time, industrial optimization, climate modeling, renewable integration, and precision resource management can deliver environmental benefits that outweigh the costs when teams design for impact. The throughline is clear. Sustainable AI practices such as model efficiency, carbon-aware region and provider selection, renewable alignment, and workload distribution show that environmental responsibility and technology advancement can coexist.

What happens next depends on coordinated governance, procurement, and engineering. Build shared metrics, align incentives, and iterate with carbon-aware playbooks for durable impact.

What happens next depends on the choices leaders make now. Organizations that ground AI sustainability strategies in clear metrics, lifecycle assessments, and measurable business value will reduce their AI carbon footprint and keep their advantage. As green AI technology matures and eco-friendly automation becomes everyday practice, artificial intelligence can shift from environmental liability to a tool for protecting shared resources. The answer is not to abandon innovation, but to deploy it intentionally.

Need a pragmatic plan for sustainable AI? Get results with AI consultant services that align efficiency and impact.

Conclusion

AI’s environmental impact spans energy, carbon, water, and e-waste, but disciplined design can turn that footprint into net value. Baselines, right-sized models, cleaner regions, and carbon-aware scheduling enable measurable reductions without slowing delivery.

As sustainability tooling and standards mature, organizations that embed them early will capture advantage and accelerate responsible innovation.

Launch sustainable AI with confidence

Assess your footprint, right-size models, and deploy carbon-aware workloads. Our experts align engineering, procurement, and governance to deliver measurable reductions without slowing product velocity.

FAQ

How much energy does AI actually consume compared to other technologies?

Training large models can consume as much electricity as one hundred households in the United States use annually, which is significant for a single system. Inference is far more efficient on a per-query basis, and total artificial intelligence energy consumption remains below two percent of global electricity use in 2025. This share is growing quickly with adoption, especially as generative applications expand. Compared to traditional data center workloads, AI operations have higher power density and computational intensity, which creates localized environmental challenges even when aggregate consumption appears modest relative to the global total.

Can businesses reduce AI environmental impact without sacrificing performance?

Yes. Pruning redundant parameters, using lower-precision arithmetic with quantization, and right-sizing model architectures can reduce computation by seventy to ninety percent while retaining roughly ninety-five percent of the original model’s accuracy for many business tasks. Pair these measures with edge deployments where sensible, select cleaner regions, and schedule workloads during renewable peaks. Together, these sustainable AI practices demonstrate that operational excellence and environmental responsibility can reinforce one another rather than compete.

Which AI applications offer the best environmental return on investment?

Process optimization, energy management, and supply chain efficiency often provide environmental benefits that exceed their own consumption by cutting waste and improving utilization. Smart buildings can reduce the energy used for heating, ventilation, air conditioning, and lighting by twenty to thirty percent. Renewable grid optimization enables greater adoption of clean energy. Precision agriculture can reduce water and chemical inputs while sustaining yields. Logistics improvements that shrink empty miles reduce transportation emissions. Focus on use cases with a clear path to measured savings.

How do different cloud providers compare on AI sustainability?

Large providers vary in renewable energy commitments, transparency of sustainability reporting, and energy sourcing by region. The most effective strategy is to evaluate specific regions for renewable share, carbon intensity, and power usage effectiveness, and to confirm claims through verified disclosures. Nordic and select Canadian regions often benefit from hydroelectric resources. Certain regions in the United States provide strong wind availability. Choose locations and providers based on independently reported data rather than brand marketing.

What regulations address AI environmental impact?

Regulation is evolving. The European Union Artificial Intelligence Act includes environmental provisions, such as requiring providers of general-purpose models to document energy consumption and encouraging voluntary codes of conduct on environmental sustainability. Multiple jurisdictions are developing data center efficiency standards that affect AI operations indirectly. Transparency requirements for reporting AI energy consumption and carbon emissions are being discussed in several regions. For now, many organizations rely on voluntary sustainability commitments, investor expectations, and industry frameworks while anticipating more structured rules as measurement methods become standardized.
